Artificial intelligence & robotics

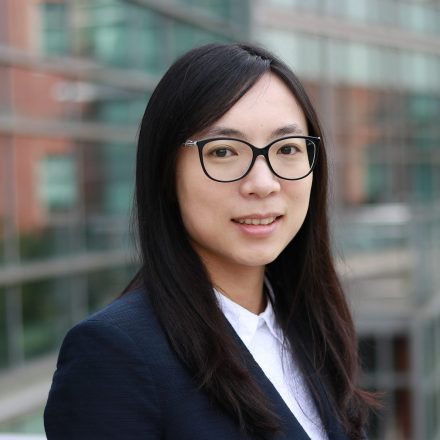

Bo Li

By devising new ways to fool AI, she is making it safer.

China

Yanan Sui

Using artificial intelligence to help paralyzed patients stand up again.

Japan

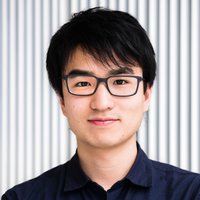

Ken Nakagaki

An inventor who develops innovative user interfaces. His fun-filled ideas pave the way for new relationships between people and machines.

China

Yifan Li

Created lidar products that lead the global autonomous driving industry.

Japan

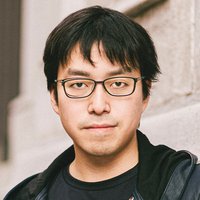

Yusuke Narita

A "revolutionary" working across numerous nations, cultures, and industries, guided by the idea that data and algorithms can change system infrastructure.